A previous post, "Preparing for the internet of things," named extreme scalability, real-time event handling, and time-to-insight as the three biggest challenges for Big Data and the Internet of Things. These challenges can be addressed now if companies are ready to invest and be proactive about what’s surely coming in the next few years.

Process more at the edge

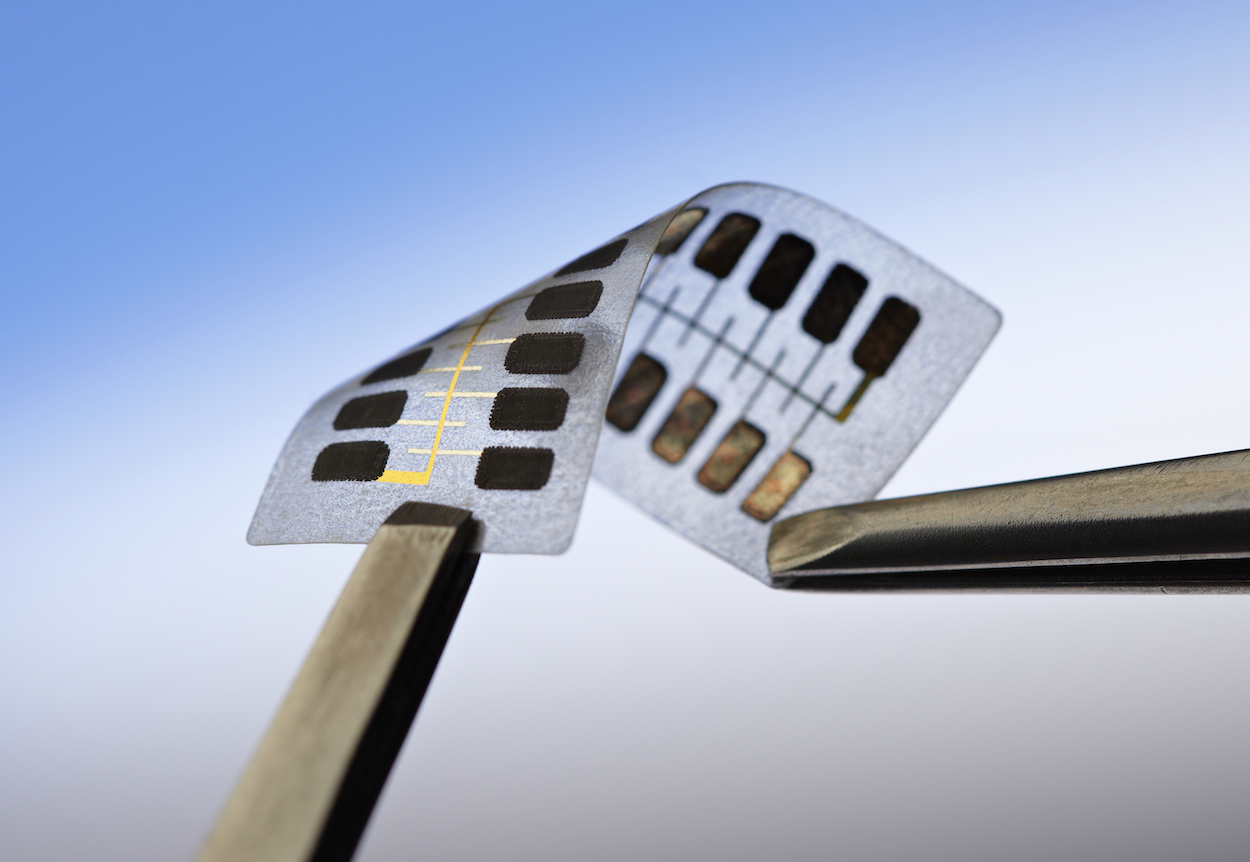

Extreme scalability is a problem partly because of the need to build ever-larger means of directing and moving data. Alongside other computing advances, computing power has grown denser, so many of the devices at the edge, where data is created and consumed, can filter and apply rules to data before it travels back to any central data store, despite their very small physical size. The edge is where the real challenge of Big Data starts, and until now it has been more of a data vacuum.

Consider home health monitoring, where there’s a potential to gather and transmit every single heartbeat and blood pressure reading back to medical staff. This would be overwhelming and inefficient. Instead, with processing at the edge, real-time event processing filters information to decide what’s urgent and what can be sent periodically as summarized data. Filtering is one of the most important aspects of managing data from the Internet of Things.
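To make the filtering idea concrete, here is a minimal Python sketch of what an edge device might do with heart-rate readings: forward urgent readings immediately and send everything else as a periodic summary. The thresholds, the window size, and the names `Reading` and `EdgeFilter` are assumptions made for illustration, not clinical guidance or any particular product's API.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List

# Hypothetical alert thresholds in beats per minute (illustrative only).
LOW_BPM = 40
HIGH_BPM = 150


@dataclass
class Reading:
    timestamp: float  # seconds since some epoch
    heart_rate: int   # beats per minute


class EdgeFilter:
    """Runs on the device: alerts immediately, summarizes the rest."""

    def __init__(self, window_size: int = 60) -> None:
        self.window_size = window_size
        self._window: List[Reading] = []

    def process(self, reading: Reading) -> List[Dict]:
        """Return the messages that should be sent upstream for this reading."""
        messages: List[Dict] = []

        # Urgent readings bypass the summary and go out right away.
        if reading.heart_rate < LOW_BPM or reading.heart_rate > HIGH_BPM:
            messages.append({
                "type": "alert",
                "timestamp": reading.timestamp,
                "heart_rate": reading.heart_rate,
            })

        # Every reading still contributes to the periodic summary.
        self._window.append(reading)
        if len(self._window) >= self.window_size:
            rates = [r.heart_rate for r in self._window]
            messages.append({
                "type": "summary",
                "start": self._window[0].timestamp,
                "end": self._window[-1].timestamp,
                "count": len(rates),
                "min": min(rates),
                "max": max(rates),
                "mean": mean(rates),
            })
            self._window = []

        return messages
```

With a window of 60 readings, a device sampling once per second would send one summary per minute plus any alerts, rather than 60 individual messages.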

Evaluate events using Big Data analytics

The Internet of Things requires that we evaluate events using the best Big Data analytics we can find. Visualization through self-service tools is the new black. Once we know what we’re looking for, discrete events can be fed into predictive models and correlated against current state (like inventory levels) or past events (like historical transactions) for automated decision making. Making decisions more quickly is critical to successfully managing the Internet of Things. While some companies are doing this today, they remain in the minority.

Faster time to insight

Currently, Big Data is primarily stored in Hadoop’s HDFS and queried through tools such as Hive, which requires custom applications and scripts. That combination demands a rare blend of traditional analytics skills and software development skills, which is exactly why we hear how scarce data scientists are. To be successful, user-friendly tools need to break that paradigm and make processing Big Data a common occurrence managed by common workers. Success with the Internet of Things depends on this shift.

The outcome of successfully moving to agile tools that can add new data, combine new data with existing sources, and ask new questions (not just pre-canned ones) is faster time to insight. Business-savvy workers performing unscripted exploration are a serious competitive advantage in the new age. Faster time to insight requires rich, compelling, interactive visualization that not only shows hidden patterns in data, but also lets the user ask follow-on questions of the data.

Platforms will rule

It’s unlikely the mainstream enterprise will tackle the Internet of Things challenge with custom development. It’s far more likely they’ll integrate using optimized platforms that provide the plumbing that connects existing back ends with powerful gateways that talk to the edge of the network, where the sensors and users sit. This infrastructure needs to grow flexibly alongside the data that it manages, allowing everything to scale to meet the requirements of the Internet of Things.
